GPFS (General Parallel File System) is a clustered file system developed by IBM, now known as IBM Storage Scale. It allows multiple nodes to read from and write to the same file system, or set of file systems, simultaneously.

In the last article, we showed you how to install and configure a GPFS cluster on a RHEL system. Today we will show you how to create a GPFS cluster file system on RHEL, covering NSD stanza file creation, NSD creation, GPFS file system creation, and mounting the file system.

If you are new to GPFS, I recommend reading the articles in the GPFS series listed below:

- Part-1 How to Install and Configure GPFS Cluster on RHEL

- Part-2 How to Create a GPFS Filesystem on RHEL

- Part-3 How to Extend a GPFS Filesystem on RHEL

- Part-4 How to Export a GPFS Filesystem in RHEL

- Part-5 How to Upgrade GPFS Cluster on RHEL

1) Disk Addition

Get the LUN IDs from the storage team, then scan the SCSI hosts for the new disks (on both nodes).

```
# for host in /sys/class/scsi_host/host*; do echo "- - -" > "$host/scan"; echo "Scanned ${host##*/}"; done
```
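If you rescan often, the loop can be kept as a small shell function. The sketch below is an illustration under our own assumptions: the function name rescan_scsi_hosts and its optional directory argument (which only exists so it can be pointed at a scratch directory for testing) are hypothetical and not part of GPFS. Writing "- - -" to a host's scan file asks the kernel to probe all channels, targets and LUNs on that host.

```bash
#!/usr/bin/env bash
# Hypothetical helper: rescan every SCSI host so newly mapped LUNs appear.
# $1 (optional) overrides the sysfs directory; defaults to /sys/class/scsi_host.
rescan_scsi_hosts() {
    local root="${1:-/sys/class/scsi_host}"
    local host
    for host in "$root"/host*; do
        # Skip if the glob matched nothing or the host has no scan file.
        [ -e "$host/scan" ] || continue
        # "- - -" = wildcard channel, target and LUN.
        echo "- - -" > "$host/scan"
        echo "Scanned ${host##*/}"
    done
}
```

Run it as root on each node (rescan_scsi_hosts with no arguments), then continue with the check below.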

After the scan, check whether the new LUNs are visible at the OS level.

```
# lsscsi --scsi --size | grep -i [Last_Five_Digit_of_LUN]
```
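To check several LUNs in one pass, a helper along these lines can read the lsscsi output once and report each ID suffix as found or missing. It is only a sketch: check_luns is a hypothetical name, and it does a plain case-insensitive text match, so a very short suffix could match an unrelated line.

```bash
# Hypothetical helper: report which LUN ID suffixes appear in the lsscsi
# output read on stdin. Returns non-zero if any suffix is missing.
check_luns() {
    local listing suffix missing=0
    listing="$(cat)"                      # read lsscsi output once
    for suffix in "$@"; do
        # -q: status only, -i: ignore case, -F: literal string match
        if grep -qiF -- "$suffix" <<< "$listing"; then
            echo "found:   $suffix"
        else
            echo "missing: $suffix"
            missing=1
        fi
    done
    return "$missing"
}
```

For example: lsscsi --scsi --size | check_luns SUFFIX1 SUFFIX2, where SUFFIX1 and SUFFIX2 stand for the last digits of the LUN IDs you received.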

2) Creating NSD StanzaFile

The mmcrnsd command is used to create cluster-wide names for the Network Shared Disks (NSDs) used by GPFS. To do so, you must define and prepare each physical disk that you want to use with GPFS as an NSD. The NSD stanza file contains the properties of the disks to be created. The mmcrnsd command may rewrite this file as needed, and the file can then be provided as input to the mmcrfs, mmadddisk, or mmrpldisk command.

The names generated by the mmcrnsd command are necessary because disks attached to multiple nodes might have different disk device names on each node. The NSD names identify each disk uniquely. This command should be run for all disks used in GPFS file systems.

A unique NSD volume ID is written to the disk to identify that a disk has been processed by the mmcrnsd command. All of the NSD commands (mmcrnsd, mmlsnsd and mmdelnsd) use this unique NSD volume ID to identify and process NSDs. After NSDs are created, GPFS cluster data is updated and available for use by GPFS.

NSD stanza file syntax:

Here are the common parameters used in an NSD stanza file.

```
%nsd:
  device=DiskName
  nsd=NsdName
  servers=ServerList
  usage={dataOnly | metadataOnly | dataAndMetadata | descOnly | localCache}
  failureGroup=FailureGroup
  pool=StoragePool
  thinDiskType={no | nvme | scsi | auto}
```

Where:

- device=DiskName – Block device name that you want to define as an NSD.

- nsd=NsdName – Specifies the name of the NSD to be created. This name must not already be used as another GPFS disk name, and it must not begin with the reserved string ‘gpfs’.

- servers=ServerList – Specifies a comma-separated list of NSD servers, in order of preference: the first server listed is used when available, otherwise the next available one. Up to eight NSD servers can be listed.

- usage – Specifies the type of data to be stored on the disk. 'dataAndMetadata' is the default for disks in the system pool and indicates that the disk contains both data and metadata.

- failureGroup – Identifies the failure group to which the disk belongs. The default is -1, which indicates that the disk has no point of failure in common with any other disk.

- pool=StoragePool – Specifies the name of the storage pool to which the NSD is assigned. The default value for pool is system.

- thinDiskType – Specifies the space reclaim disk type for thin-provisioned disks. The default, no, means the disk does not support space reclaim.
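To illustrate how these parameters fit together, here is a hedged sketch of a shell function that prints one %nsd stanza and enforces two of the rules listed above (the NSD name must not begin with 'gpfs', and at most eight NSD servers may be listed). The function name make_nsd_stanza and its positional arguments are our own invention; optional parameters left empty are simply omitted so GPFS can apply its defaults.

```bash
# Hypothetical helper: print one %nsd stanza.
# Usage: make_nsd_stanza DEVICE NSD_NAME [SERVERS] [USAGE] [POOL]
make_nsd_stanza() {
    local device="${1:-}" nsd="${2:-}" servers="${3:-}" usage="${4:-}" pool="${5:-}"
    if [ -z "$device" ] || [ -z "$nsd" ]; then
        echo "make_nsd_stanza: device and NSD name are required" >&2
        return 1
    fi
    # NSD names must not begin with the reserved string 'gpfs'.
    case "$nsd" in
        gpfs*)
            echo "make_nsd_stanza: '$nsd' begins with reserved 'gpfs'" >&2
            return 1 ;;
    esac
    # At most eight NSD servers may be listed (comma-separated).
    if [ -n "$servers" ]; then
        local count
        count=$(awk -F, '{ print NF }' <<< "$servers")
        if [ "$count" -gt 8 ]; then
            echo "make_nsd_stanza: $count servers given, maximum is 8" >&2
            return 1
        fi
    fi
    printf '%%nsd:\n'
    printf '  device=%s\n' "$device"
    printf '  nsd=%s\n' "$nsd"
    # Optional attributes: omitted when empty so defaults apply.
    [ -n "$servers" ] && printf '  servers=%s\n' "$servers"
    [ -n "$usage" ] && printf '  usage=%s\n' "$usage"
    [ -n "$pool" ] && printf '  pool=%s\n' "$pool"
    return 0
}
```

For example, make_nsd_stanza /dev/sdd 2gdatansd01 2ggpfsnode01-gpfs,2ggpfsnode02-gpfs dataAndMetadata system > /usr/lpp/mmfs/2gdata.nsd would write a stanza with the same attributes as the sample file used in this article.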

Use the sg_persist command to confirm that no persistent reservation is held on the disk; the output should report 'there is NO reservation held'. If a reservation is present, make the necessary changes at the VMware or storage level.

```
# sg_persist -r /dev/sdd
  EMC       SYMMETRIX         5100
  Peripheral device type: disk
  PR generation=0x1, there is NO reservation held
```

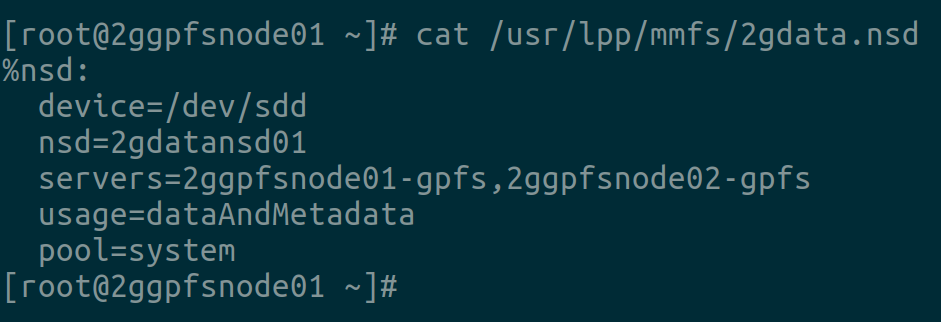
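If you have several disks to check, the test can be scripted. The sketch below assumes the sg3_utils package (which provides sg_persist) is installed and that you run it as root on each node; no_reservation_held and check_disks_unreserved are hypothetical names. It only looks for the 'there is NO reservation held' text shown above, so treat it as a convenience check rather than a full reservation audit.

```bash
# Hypothetical helper: succeed only if the sg_persist -r output read on
# stdin says that no persistent reservation is held.
no_reservation_held() {
    grep -q "there is NO reservation held"
}

# Hypothetical wrapper: check several disks (needs root and sg3_utils).
check_disks_unreserved() {
    local dev rc=0
    for dev in "$@"; do
        if sg_persist -r "$dev" 2>/dev/null | no_reservation_held; then
            echo "$dev: no reservation held"
        else
            echo "$dev: reservation present or query failed"
            rc=1
        fi
    done
    return "$rc"
}
```

For example: check_disks_unreserved /dev/sdd /dev/sde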

Below is a sample NSD stanza file, created for this article, that adds one data disk. We name it '2gdata.nsd' and place it under '/usr/lpp/mmfs'.

```
# cat /usr/lpp/mmfs/2gdata.nsd
%nsd:
  device=/dev/sdd
  nsd=2gdatansd01
  servers=2ggpfsnode01-gpfs,2ggpfsnode02-gpfs
  usage=dataAndMetadata
  pool=system
```

Make a Note: You don't need to include every parameter. Add only the ones you need; the rest take their default values, where one exists.

3) Creating Network Shared Disk (NSD)

The mmcrnsd command is used to create cluster-wide names for NSDs used by GPFS.

```
# mmcrnsd -F /usr/lpp/mmfs/2gdata.nsd
mmcrnsd: Processing disk sdd
mmcrnsd: Propagating the cluster configuration data to all
  affected nodes.  This is an asynchronous process.
```

After the NSD is created, the '2gdata.nsd' file is rewritten and looks like this:

```
# cat /usr/lpp/mmfs/2gdata.nsd
# /dev/sdd:2ggpfsnode01-gpfs:2ggpfsnode02-gpfs:dataAndMetadata::2gdatansd01:system
2gdatansd01:::dataAndMetadata:-1::system
```

Run the mmlsnsd command to check the NSD status. It lists the NSD you just created, and the File system column shows '(free disk)' because no file system has been created on it yet.

```
# mmlsnsd

 File system   Disk name    NSD servers
-------------------------------------------------------------------------------
 (free disk)   2gdatansd01  2ggpfsnode01-gpfs,2ggpfsnode02-gpfs
 (free disk)   tiebreaker1  (directly attached)
 (free disk)   tiebreaker2  (directly attached)
 (free disk)   tiebreaker3  (directly attached)
```
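Before running mmcrfs it can help to list only the NSDs that are still free. This sketch parses the mmlsnsd output format shown above (free_nsds is a hypothetical name); because '(free disk)' spans two whitespace-separated fields, the disk name is the third field. In this cluster the tiebreaker disks are also reported as free disks, so review the list before picking disks for a new file system.

```bash
# Hypothetical helper: print the names of NSDs that mmlsnsd output
# (read on stdin) reports as "(free disk)".
free_nsds() {
    # "(free disk)" is two fields, so the disk name is field 3.
    awk '$1 == "(free" && $2 == "disk)" { print $3 }'
}
```

For example: mmlsnsd | free_nsds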

4) Creating GPFS Filesystem

Use the mmcrfs command to create a GPFS file system. Starting with GPFS 5.0, mmcrfs by default creates a file system with a 4 MiB block size and an 8 KiB subblock size, which performs well for many workloads. You can choose a different block size to suit your application workload with the mmcrfs '-B' option.

Make a Note: The block size is chosen when the file system is created with mmcrfs, not when the NSDs are created, and it cannot be changed afterwards.
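As a rough illustration of the block and subblock defaults, the shell arithmetic below computes how many subblocks fit in a block and how much space a file would occupy if its last partial block were rounded up to whole subblocks. This is a simplified model and an assumption on our part: it ignores data stored in the inode, replication and metadata, and the function names are hypothetical.

```bash
# Simplified model (assumption): whole blocks for the bulk of a file, and the
# last partial block rounded up to whole subblocks. Ignores data-in-inode,
# replication and metadata overhead. All sizes are in bytes.

# Number of subblocks in one block.
subblocks_per_block() {
    local block=$1 sub=$2
    echo $(( block / sub ))
}

# Approximate space allocated for a file of the given size.
allocated_bytes() {
    local size=$1 block=$2 sub=$3
    local full=$(( size / block ))                 # complete blocks
    local tail=$(( size % block ))                 # bytes in the last block
    local tail_subs=$(( (tail + sub - 1) / sub ))  # round up to subblocks
    echo $(( full * block + tail_subs * sub ))
}
```

With the defaults, subblocks_per_block 4194304 8192 prints 512, and a 10 KiB file (allocated_bytes 10240 4194304 8192) works out to 16384 bytes, that is, two 8 KiB subblocks instead of a full 4 MiB block.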

Syntax:

```
mmcrfs [Mount_Point_Name] [FS_Name] -F [Path_to_StanzaFile]
```

Execute the following command to create a GPFS file system.

```
# mmcrfs /GPFS/2gdata 2gdatalv -F /usr/lpp/mmfs/2gdata.nsd

The following disks of 2gdatalv will be formatted on node 2ggpfsnode01:
    2gdatansd01: size 10241 MB
Formatting file system ...
Disks up to size 106.74 GB can be added to storage pool system.
Creating Inode File
Creating Allocation Maps
Creating Log Files
Creating Inode Allocation Map
Creating Block Allocation Map
Formatting Allocation Map for storage pool system
Completed creation of file system /dev/2gdatalv.
mmcrfs: Propagating the cluster configuration data to all
  affected nodes.  This is an asynchronous process.
```

Use the mmlsfs command to list the attributes of a file system. To see all attributes of '2gdatalv' (the output is quite long), run:

```
# mmlsfs 2gdatalv
```

To show only selected attributes, pass the corresponding flags. The following example displays the inode size (-i), block size (-B), subblock size (-f), file system version (-V), disks (-d) and default mount point (-T):

```
# mmlsfs 2gdatalv -i -B -f -V -d -T
flag                value                    description
------------------- ------------------------ -----------------------------------
 -i                 4096                     Inode size in bytes
 -B                 4194304                  Block size
 -f                 8192                     Minimum fragment (subblock) size in bytes
 -V                 30.00 (5.1.8.0)          File system version
 -d                 2gdatansd01              Disks in file system
 -T                 /GPFS/2gdata             Default mount point
```
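When scripting, you may want just the value of a single attribute. This sketch (mmlsfs_value is a hypothetical name) reads mmlsfs output in the format shown above and prints the second column of the row whose first column matches the requested flag; values that contain spaces, such as the -V version string, are cut at the first space.

```bash
# Hypothetical helper: print the value column for one flag from mmlsfs
# output read on stdin, e.g. "-B" -> 4194304.
mmlsfs_value() {
    local flag="$1"
    awk -v f="$flag" '$1 == f { print $2; exit }'
}
```

For example, mmlsfs 2gdatalv -B | mmlsfs_value -B should print 4194304 for the file system above, which is 4194304 / 1024 / 1024 = 4 MiB.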

5) Mounting GPFS File System

The mmmount command mounts the specified GPFS file system on one or more nodes in the cluster. If no nodes are specified, the file system is mounted only on the node from which the command was issued. Use the '-a' option to mount the GPFS file system on all nodes in the cluster at once.

```
# mmmount 2gdatalv -a
Thu May 11 11:38:09 +04 2023: mmmount: Mounting file systems ...
2ggpfsnode01-gpfs:
2ggpfsnode02-gpfs:
```

To mount all GPFS file systems on all nodes at once, run:

```
# mmmount all -a
```

Finally, check the mounted file system with the df command. The subshell prints df's header line and then filters the remaining lines for GPFS entries:

```
# df -hT | (IFS= read -r header; echo "$header"; grep -i gpfs) | column -t
Filesystem  Type  Size  Used  Avail  Use%  Mounted  on
2gdatalv    GPFS  10G   1.4G  8.7G   14%   /GPFS/2gdata
```
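To verify the mount from a script on each node, you can check the kernel's mount table instead of parsing df. The sketch below assumes GPFS file systems appear in /proc/mounts with the file system type gpfs (compared case-insensitively here); is_gpfs_mounted is a hypothetical name, and its optional second argument exists only so it can be tested against a sample file. For a cluster-wide view, GPFS also provides the mmlsmount command.

```bash
# Hypothetical helper: succeed if MOUNT_POINT is mounted with file system
# type "gpfs" according to a mounts table (default /proc/mounts).
is_gpfs_mounted() {
    local mnt="$1" table="${2:-/proc/mounts}"
    # /proc/mounts fields: device, mount point, fs type, options, dump, pass.
    awk -v m="$mnt" '$2 == m && tolower($3) == "gpfs" { found = 1 }
                     END { exit !found }' "$table"
}
```

For example, run is_gpfs_mounted /GPFS/2gdata && echo mounted on each node.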

Bonus Tips:

After the GPFS file system is created, the 'mmlsnsd' command also shows which file system each NSD belongs to:

```
# mmlsnsd

 File system   Disk name    NSD servers
-----------------------------------------------------------------------
 2gdatalv      2gdatansd01  2ggpfsnode01-gpfs,2ggpfsnode02-gpfs
 (free disk)   tiebreaker1  (directly attached)
 (free disk)   tiebreaker2  (directly attached)
 (free disk)   tiebreaker3  (directly attached)
```

Similarly, you can find the file system information in the ‘mmlsconfig’ command output as shown below:

```
# mmlsconfig
Configuration data for cluster 2gtest-cluster.2ggpfsnode01-gpfs:
----------------------------------------------------------------
clusterName 2gtest-cluster.2ggpfsnode01-gpfs
clusterId 6339012640885012929
autoload yes
dmapiFileHandleSize 32
minReleaseLevel 5.1.8.0
tscCmdAllowRemoteConnections no
ccrEnabled yes
cipherList AUTHONLY
sdrNotifyAuthEnabled yes
tiebreakerDisks tiebreaker1;tiebreaker2;tiebreaker3
autoBuildGPL yes
pagepool 512M
adminMode central

File systems in cluster 2gtest-cluster.2ggpfsnode01-gpfs:
---------------------------------------------------------
/dev/2gdatalv
```
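If you need the list of file systems known to the cluster in a script, the 'File systems in cluster' section of the mmlsconfig output can be extracted as sketched below. The parsing is based only on the output format shown above, and cluster_filesystems is a hypothetical name.

```bash
# Hypothetical helper: print the device names listed under
# "File systems in cluster ..." in mmlsconfig output read on stdin.
cluster_filesystems() {
    awk '
        /^File systems in cluster/ { in_fs = 1; next }   # section header
        in_fs && /^-+$/            { next }              # dashed underline
        in_fs && NF                { print $1 }          # e.g. /dev/2gdatalv
    '
}
```

For example: mmlsconfig | cluster_filesystems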

Final Thoughts

I hope you learned how to create a GPFS Cluster File System on a RHEL system.

In this article, we covered creating the NSD stanza file and NSDs, creating the GPFS file system, and mounting it on RHEL.

If you have any questions or feedback, feel free to comment below.